More than 91,000 attack sessions against AI infrastructure were recorded between October 2025 and January 2026. Telemetry from honeypot sensors worldwide points to two structured campaigns: one exploiting server-side request forgery (SSRF) vulnerabilities and one probing large language model (LLM) interfaces. The sophistication and coordination of this activity suggest that threat actors now treat AI deployments as a priority target.

Researchers tracking internet scanning activity observed attackers targeting exposed AI infrastructure, API integrations, and model management interfaces. Unlike opportunistic scanning, this activity was structured and tuned for data collection and potential follow-on exploitation.

Surge in AI Infrastructure Attacks Confirmed by Threat Intelligence Monitoring

Threat intelligence analysts recorded more than 91,000 malicious sessions across a distributed sensor network, making this one of the most focused waves of activity against AI infrastructure observed to date. The data came from honeypot infrastructure designed to mimic vulnerable AI services and API endpoints.

Between October 2025 and early January 2026, attackers conducted targeted probing of AI infrastructure across geographically diverse regions. The data indicates that AI attack surfaces are no longer just scanned opportunistically; they are being systematically mapped and, in some cases, actively exploited. Two distinct campaigns stand out:

- A structured SSRF attack campaign targeting outbound request mechanisms

- A large-scale reconnaissance campaign probing LLM endpoints for compatibility with commercial APIs

Both campaigns show a new focus on systematically exploiting weaknesses in AI deployments.

The SSRF Campaign: Leveraging Model Pull and Webhook Integrations

The first campaign centered on SSRF vulnerabilities in AI deployments. SSRF lets an attacker coerce a server into making outbound requests it was never meant to make, effectively turning the victim's infrastructure into a proxy for reconnaissance or for confirming, via a callback, that an injection succeeded.
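To make the mechanism concrete, here is a minimal Python sketch of a check an AI service could run before fetching any user-influenced URL, such as a model source or a webhook media link. It assumes the service only needs to reach public http(s) hosts, and the function name `is_safe_outbound_url` is hypothetical. It resolves the hostname and refuses the request if any resulting address is loopback, private, link-local (which covers cloud metadata endpoints such as 169.254.169.254), or otherwise not globally routable.

```python
import ipaddress
import socket
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def resolve_addresses(host: str) -> set[str]:
    """Return every IP address a host resolves to; IP literals resolve to themselves."""
    return {info[4][0] for info in socket.getaddrinfo(host, None)}


def is_safe_outbound_url(url: str) -> bool:
    """Allow only http(s) URLs whose host resolves exclusively to globally routable IPs."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES or not parts.hostname:
        return False
    try:
        addresses = resolve_addresses(parts.hostname)
    except (socket.gaierror, UnicodeError):
        return False  # fail closed on names that do not resolve
    if not addresses:
        return False
    for addr in addresses:
        ip = ipaddress.ip_address(addr.split("%")[0])  # drop any IPv6 zone suffix
        # Rejects loopback, RFC 1918, link-local (incl. 169.254.169.254) and other non-public ranges
        if not ip.is_global:
            return False
    return True
```

This is a guard, not a complete defense: the HTTP client resolves the name again when it connects, so a DNS-rebinding attacker can still slip through unless the request is pinned to the validated address, and redirects must be disabled or re-checked. Network-level egress filtering remains the stronger control.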

The campaign targeted two main vectors:

1. Ollama Model Pull Abuse

Attackers injected malicious registry URLs into deployments of Ollama, exploiting its model pull functionality. By manipulating model source locations, adversaries triggered outbound HTTP requests toward attacker-controlled infrastructure.

This technique indicates that AI infrastructure attacks increasingly target model supply-chain mechanics, not only API endpoints. Because many AI operators allow dynamic model fetching, insufficient validation of registry URLs creates a direct SSRF exposure point.

2. Twilio Webhook Manipulation

Twilio integrations were also abused by altering MediaUrl parameters within SMS webhook configurations. This forced backend systems to initiate outbound requests to malicious domains.
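Twilio's inbound message webhooks deliver attachments as `NumMedia` plus numbered `MediaUrl0`, `MediaUrl1`, and so on. The following is a minimal sketch of a pre-fetch check, assuming the backend downloads those URLs; the allowlist containing only `api.twilio.com` is an illustrative assumption to adjust for your account. Validating the `X-Twilio-Signature` header, which Twilio's helper libraries support, should come first so that forged requests are rejected outright.

```python
import re
from urllib.parse import urlsplit

# Assumption: hosts that legitimately serve your Twilio media; adjust for your account.
ALLOWED_MEDIA_HOSTS = {"api.twilio.com"}
MEDIA_PARAM = re.compile(r"MediaUrl\d+")


def rejected_media_urls(form: dict[str, str]) -> list[str]:
    """Return the names of MediaUrl<N> parameters that the backend must not fetch."""
    rejected = []
    for name, value in form.items():
        if not MEDIA_PARAM.fullmatch(name):
            continue  # only inspect the numbered media URL parameters
        parts = urlsplit(value)
        # Compare the parsed hostname, never a string prefix of the raw URL
        if parts.scheme != "https" or parts.hostname not in ALLOWED_MEDIA_HOSTS:
            rejected.append(name)
    return sorted(rejected)
```

Because the comparison uses the parsed hostname rather than a string prefix, look-alikes such as `https://api.twilio.com.attacker.example/` or `https://api.twilio.com@attacker.example/` are rejected.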

The SSRF campaign peaked over the Christmas period, generating 1,688 sessions within a 48-hour window. Analysts identified 62 source IP addresses across 27 countries, a pattern more consistent with VPS-based automation than with traditional botnet infrastructure.

A notable detail was the repeated use of ProjectDiscovery's OAST (out-of-band application security testing) callback infrastructure. OAST tooling is normally used for legitimate security testing; here it was used to confirm successful SSRF callbacks at scale. This suggests that grey-hat operators or bug bounty participants may be running industrialized scanning operations.

Large-Scale LLM Endpoint Reconnaissance: 80,469 Sessions in 11 Days

The second and more alarming campaign began on December 28, 2025, targeting exposed large language model endpoints. Over eleven days, two dedicated IP addresses orchestrated 80,469 sessions probing AI services for misconfigurations and proxy exposure.
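The reported figures are easier to compare as rates. The short calculation below uses only the numbers given above and assumes, for simplicity, that sessions were spread evenly across each window, which real traffic rarely is.

```python
# Figures as reported above; averages assume sessions were spread evenly.
ssrf_sessions, ssrf_hours, ssrf_ips = 1_688, 48, 62
llm_sessions, llm_days, llm_ips = 80_469, 11, 2

ssrf_per_hour = ssrf_sessions / ssrf_hours
ssrf_per_ip = ssrf_sessions / ssrf_ips
llm_per_hour = llm_sessions / (llm_days * 24)
llm_per_ip_per_minute = llm_sessions / llm_ips / (llm_days * 24 * 60)

print(f"SSRF peak: {ssrf_per_hour:.1f} sessions/hour, {ssrf_per_ip:.1f} sessions per IP")
print(f"LLM recon: {llm_per_hour:.1f} sessions/hour, "
      f"{llm_per_ip_per_minute:.2f} sessions per IP per minute")
```

It prints 35.2 sessions per hour and 27.2 sessions per IP for the SSRF peak, and 304.8 sessions per hour, or about 2.54 sessions per IP per minute, for the LLM reconnaissance. A per-IP pace that low is easy to miss with coarse per-minute thresholds, which is why the rate-limiting advice in this article stresses behavioral baselining.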

Attackers tested compatibility across both OpenAI-style and Google Gemini API formats, systematically probing models including:

- GPT-4o

- Claude

- Llama

- DeepSeek

- Gemini

- Mistral

- Qwen

- Grok

Probes used innocuous queries such as “How many states are there in the United States?”, likely chosen to avoid triggering anomaly detection. This fingerprinting approach let attackers identify responsive models without sending payloads with obvious malicious signatures.
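Because the probes switched between OpenAI-style and Gemini-style requests, it helps to log each request's API style and target model. The sketch below assumes the public REST shapes, `POST /v1/chat/completions` with a `messages` array for OpenAI-compatible APIs and `POST /v1beta/models/{model}:generateContent` with a `contents` array for Gemini; self-hosted proxies may mount these under other prefixes, and `classify_llm_request` is a hypothetical helper.

```python
import re
from typing import Optional

# Assumed public REST shapes; proxies may mount them under different prefixes.
GEMINI_PATH = re.compile(
    r"/v1(?:beta)?/models/([^/:]+):(?:generateContent|streamGenerateContent)$"
)


def classify_llm_request(path: str, body: dict) -> tuple[str, Optional[str]]:
    """Return (api_style, model) for a request; path excludes the query string."""
    gemini = GEMINI_PATH.search(path)
    if gemini and "contents" in body:
        return "gemini", gemini.group(1)  # Gemini carries the model in the URL
    if path.endswith("/chat/completions") and "messages" in body:
        return "openai", body.get("model")  # OpenAI-style carries it in the body
    return "unknown", None
```

Feeding the resulting `(style, model)` pairs into per-source logs provides the raw material for spotting rapid switching between formats and model families.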

The infrastructure behind this campaign traces back to IP addresses previously associated with CVE exploitation activity, which account for more than 4 million sensor hits across historical tracking datasets. This operational discipline suggests the reconnaissance feeds larger monetization or access-broker pipelines.

These findings confirm that AI infrastructure attacks now include systematic LLM endpoint reconnaissance as a core tactic.

Why is AI Deployment Security Now a Prime Target?

AI infrastructure presents a uniquely attractive attack surface for several reasons:

- AI services often run with high API privileges

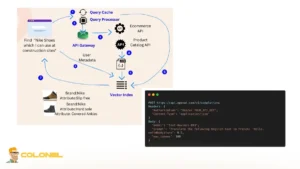

- Model endpoints are frequently exposed through reverse proxies

- Developers prioritize functionality over hardened network egress controls

- Model pulling and webhook integrations expand outbound connectivity

Unlike traditional web applications, AI deployments combine API gateways, model registries, third-party integrations, and automated outbound requests. This layered complexity increases the likelihood of misconfiguration.

Even as AI deployment security matures, attackers appear to be adapting faster than many organizations can implement defensive controls.

“GreyNoise observed over 91,000 attack sessions targeting AI infrastructure between October 2025 and January 2026, revealing coordinated reconnaissance and SSRF exploitation campaigns.” (GreyNoise)

Indicators That Your Environment May Be Targeted

Organizations operating AI infrastructure should monitor for the following signs of active exploitation:

- Unexpected outbound HTTP requests from model pull services

- DNS lookups toward OAST-related callback domains

- High-frequency probing of LLM endpoints from limited ASN clusters

- Repeated low-complexity API queries across multiple model families

- Rapid OpenAI-compatible and Gemini-format request switching

These behaviors match the patterns seen in the AI infrastructure attacks observed between October 2025 and January 2026.
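For the DNS indicator, here is a minimal sketch of a matcher over resolver logs. The suffix list is an assumption: it reflects domains commonly used by ProjectDiscovery's public interactsh callback servers and should be checked against current interactsh defaults and your own threat intelligence. Log records are assumed to be `(client_ip, query_name)` pairs.

```python
from typing import Iterable, Iterator

# Assumption: illustrative interactsh callback suffixes; verify against current defaults.
OAST_SUFFIXES = ("oast.pro", "oast.live", "oast.site", "oast.online",
                 "oast.fun", "oast.me", "interact.sh")


def is_oast_lookup(qname: str) -> bool:
    """True if the query name is an OAST suffix or any subdomain of one."""
    name = qname.lower().rstrip(".")
    return any(name == s or name.endswith("." + s) for s in OAST_SUFFIXES)


def flag_oast_queries(records: Iterable[tuple[str, str]]) -> Iterator[tuple[str, str]]:
    """Yield (client_ip, qname) records that hit a known OAST callback domain."""
    for client_ip, qname in records:
        if is_oast_lookup(qname):
            yield client_ip, qname
```

Matching on whole-label suffixes avoids false hits on names such as `notoast.fun` or `oast.pro.example.com`.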

Defensive Measures to Mitigate AI Infrastructure Attacks

The wave of more than 91,000 AI infrastructure attacks highlights an urgent need for practical, layered defenses. Because the observed campaigns combined SSRF exploitation with systematic LLM endpoint reconnaissance, organizations must strengthen both outbound controls and API-level protections. AI deployment security can no longer focus solely on inbound filtering; outbound abuse has become a primary risk vector.

Below are focused defensive actions aligned with the tactics documented in these campaigns.

Enforce Strict Model Pull Restrictions

Unrestricted model pull functionality increases exposure to SSRF abuse. Organizations should limit external model registries using allowlists and disable dynamic model fetching in production environments whenever possible.

Treat model sources as part of the software supply chain. Restricting where AI systems can fetch models significantly reduces the attack surface exploited in recent AI infrastructure attacks.
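Ollama model references can embed a registry host (for example `hf.co/user/repo` or `registry.example.com/namespace/model:tag`), which is exactly where an injected registry hides. The sketch below checks a reference before it reaches the pull API. Its parsing rule is an assumption modeled on Docker-style references, treating the first path component as a host when there are three components or when it contains `.` or `:`, and it is stricter than Ollama's own parser; `ALLOWED_REGISTRIES` is a placeholder for your approved list.

```python
DEFAULT_REGISTRY = "registry.ollama.ai"
# Placeholder: the registries your organization has approved.
ALLOWED_REGISTRIES = {"registry.ollama.ai"}


def registry_host(model_ref: str) -> str:
    """Best-effort registry host for a reference like host/namespace/model:tag."""
    parts = model_ref.strip().split("/")
    first = parts[0].lower()
    # Assumed rule (Docker-style, stricter than Ollama's parser): the first component
    # is a host if there are three or more components or it looks like host[:port].
    if len(parts) >= 3 or (len(parts) == 2 and ("." in first or ":" in first)):
        return first
    return DEFAULT_REGISTRY


def is_allowed_pull(model_ref: str) -> bool:
    """Reject full URLs and any reference whose registry host is not allowlisted."""
    if "://" in model_ref:
        return False
    host = registry_host(model_ref).split(":")[0]  # ignore an explicit port
    return host in ALLOWED_REGISTRIES
```

A check like this belongs in a proxy or admission layer in front of the pull endpoint. It complements rather than replaces egress filtering, since name checks say nothing about where the resulting connection is ultimately routed.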

Implement Egress Filtering

Because SSRF attacks rely on forced outbound callbacks, egress filtering is critical. Outbound HTTP and HTTPS traffic from AI services should be restricted to approved destinations only.

Blocking known callback validation domains at the DNS level and monitoring unusual outbound traffic patterns can immediately disrupt SSRF-based exploitation attempts.
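Monitoring unusual outbound traffic can start as simply as comparing destinations against a known-good baseline. In this sketch the baseline set and the `(service, destination_host)` record shape are assumptions, for example derived from a period of reviewed proxy or flow logs.

```python
from collections import Counter
from typing import Iterable


def new_destinations(baseline: set[str],
                     observed: Iterable[tuple[str, str]]) -> Counter:
    """Count (service, host) pairs whose host is absent from the known-good baseline."""
    unexpected: Counter = Counter()
    for service, host in observed:
        host = host.lower().rstrip(".")  # normalise case and trailing root dot
        if host not in baseline:
            unexpected[(service, host)] += 1
    return unexpected
```

Model-pull and webhook services typically talk to a small, stable set of hosts, so any new `(service, host)` pair from them is a reasonable alert candidate.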

Apply Intelligent Rate Limiting

The LLM reconnaissance campaign relied on high-volume but low-noise probing. Basic rate limiting may not detect this activity.

Organizations should apply per-IP and per-API key rate controls, while monitoring for repetitive low-complexity prompts across multiple model endpoints. Behavioral baselining is essential to detect reconnaissance without obvious malicious payloads.
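A minimal in-memory sketch of per-IP and per-API-key limiting with a sliding window follows. A request is admitted only if both its IP bucket and its key bucket have room, so rotating keys from one IP and spreading one key across many IPs are both constrained. The class name and thresholds are hypothetical, and a production gateway would keep this state in a shared store.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Optional


class SlidingWindowLimiter:
    """Admit at most `limit` requests per `window` seconds per client IP and per API key."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self.buckets: defaultdict = defaultdict(deque)  # bucket id -> request timestamps

    def _prune(self, bucket: deque, now: float) -> None:
        while bucket and now - bucket[0] >= self.window:
            bucket.popleft()  # forget requests that have aged out of the window

    def allow(self, client_ip: str, api_key: Optional[str]) -> bool:
        now = self.clock()
        ids = [("ip", client_ip)] + ([("key", api_key)] if api_key is not None else [])
        buckets = [self.buckets[i] for i in ids]
        for bucket in buckets:
            self._prune(bucket, now)
        if any(len(bucket) >= self.limit for bucket in buckets):
            return False  # either the IP or the key is over budget
        for bucket in buckets:
            bucket.append(now)
        return True
```

Since the reconnaissance traffic was low-noise, a limiter like this is a backstop; the behavioral checks for repeated low-complexity prompts are what surface probing that stays under the limit.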

Harden API Gateways and Reverse Proxies

AI deployments frequently expose model endpoints through reverse proxies. These gateways must enforce strict authentication and avoid revealing model metadata through verbose error responses.

Internal LLM services should never be directly internet-facing. Limiting exposure reduces the likelihood of automated enumeration.
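Two gateway-side habits follow from this advice: compare API keys without timing side channels, and return errors that reveal nothing about the model or backend behind the proxy. The sketch assumes a hypothetical in-memory `VALID_KEYS` set; a real deployment would store hashed keys in a secrets manager.

```python
import hmac
import json
from typing import Optional

# Hypothetical key store for illustration; keep real keys hashed in a secrets manager.
VALID_KEYS = {"example-key-123"}
# Statuses passed through to clients; everything else becomes a generic 502.
PUBLIC_ERRORS = {400: "bad request", 401: "unauthorized", 429: "rate limited"}


def authenticate(presented: Optional[str]) -> bool:
    """Check an API key; compare_digest avoids byte-by-byte early-exit timing leaks."""
    if not presented:
        return False
    return any(hmac.compare_digest(presented.encode(), key.encode()) for key in VALID_KEYS)


def client_error(upstream_status: int) -> tuple[int, str]:
    """Map an upstream failure to a response that names no model, version, or backend."""
    status = upstream_status if upstream_status in PUBLIC_ERRORS else 502
    return status, json.dumps({"error": PUBLIC_ERRORS.get(status, "upstream error")})
```

Every other upstream status, including a model-not-found 404, collapses to the same generic 502, so an enumeration probe cannot tell which models exist from the error alone.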

Monitor for Multi-Model Probing Patterns

The second campaign demonstrated systematic testing across OpenAI-compatible and Gemini-style API formats. Security teams should alert on rapid model switching attempts and repeated cross-family queries.

Such patterns are strong indicators of reconnaissance feeding into broader AI infrastructure attacks.
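A minimal sketch of that alerting logic over one time window of gateway logs follows. It assumes records of `(source_ip, api_style, model, prompt)` and flags a source that touches many model families while repeating the same prompt; the prefix-to-family table and both thresholds are illustrative assumptions, not tuned values.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Iterable

# Illustrative prefix-to-family table; real model names vary widely.
FAMILY_PREFIXES = {
    "gpt": "openai", "claude": "anthropic", "llama": "meta", "deepseek": "deepseek",
    "gemini": "google", "mistral": "mistral", "qwen": "qwen", "grok": "xai",
}


def model_family(model: str) -> str:
    name = model.lower()
    for prefix, family in FAMILY_PREFIXES.items():
        if name.startswith(prefix):
            return family
    return "other"


@dataclass
class SourceStats:
    families: set = field(default_factory=set)
    styles: set = field(default_factory=set)
    prompts: dict = field(default_factory=lambda: defaultdict(int))


def flag_multi_model_probing(records: Iterable[tuple[str, str, str, str]],
                             min_families: int = 4, min_repeats: int = 3) -> dict:
    """Flag sources that hit many model families while repeating one prompt."""
    stats: defaultdict = defaultdict(SourceStats)
    for source, style, model, prompt in records:
        s = stats[source]
        s.families.add(model_family(model))
        s.styles.add(style)
        s.prompts[" ".join(prompt.lower().split())] += 1  # normalise case and spacing
    flagged = {}
    for source, s in stats.items():
        top_repeat = max(s.prompts.values())
        if len(s.families) >= min_families and top_repeat >= min_repeats:
            flagged[source] = {"families": sorted(s.families),
                               "styles": sorted(s.styles), "top_repeat": top_repeat}
    return flagged
```

Against the campaign's pattern, a single IP sending the same state-count question to GPT, Claude, Llama, DeepSeek, and Gemini models in both API styles would be flagged, while a user repeatedly querying one model would not.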

Strategic Implications for the AI Ecosystem

The more than 91,000 recorded attack sessions mark a turning point in how threat actors view artificial intelligence systems. AI services are no longer experimental targets; they are now part of professional reconnaissance workflows.

The operational maturity of these campaigns indicates that reconnaissance data may feed into credential harvesting, API key resale markets, or lateral movement operations within enterprise environments.

Organizations deploying LLM services, model hosting platforms, or AI-integrated applications should treat AI infrastructure attacks as an ongoing, industrialized threat category rather than isolated scanning events.

Frequently Asked Questions

What are AI infrastructure attacks?

AI infrastructure attacks refer to coordinated exploitation attempts targeting AI deployments, including SSRF abuse, exposed LLM endpoints, and misconfigured API integrations.

Why are LLM endpoints being targeted?

LLM endpoints often expose commercial AI APIs or internal models that can be abused for data access, API key theft, or unauthorized compute usage.

How does SSRF impact AI deployments?

SSRF allows attackers to force AI servers to initiate outbound connections, potentially exposing metadata services, internal APIs, or validating callback infrastructure.

Were commercial AI providers directly breached?

Current evidence indicates reconnaissance of exposed or misconfigured deployments rather than confirmed breaches of major AI providers.

What is the most effective immediate mitigation step?

Implementing strict egress filtering and rate limiting on AI endpoints is among the fastest ways to reduce exposure.